Give thy thoughts no tongue . . .

--Shakespeare (Hamlet, I, 3)

Evolution.

1. Collectively, those modules, centers, and circuits of the brain that developed ca. 4 million to 200,000 years ago in members of the genus Homo. 2. Specifically, those areas of the primate forebrain, midbrain, and hindbrain adapted for a. emotional communication, b. linguistic communication, c. sequential planning, d. tool-making, and e. rational thought.

Usage I: The human brain is both verbal (see SPEECH and WORD) and nonverbal. Sometime between 4 million and 200,000 years ago (anthropologists are not sure when) human beings began to speak. And yet, despite the immense power of words, nonverbal signals are still used a. to convey emotions, feelings, and moods; and b. to express the highs and lows of social status. Moreover, vocalizing itself--perhaps because speech and manual signing co-evolved--is accompanied in every culture by a panoply of palm-up, palm-down, pointing, and mime cues. (N.B.: Mime cues pantomime shapes, relationships, and concepts largely unexpressed until Homo set foot in Nonverbal World.)

Usage II: Remarkably little in the human brain is new; nearly all of it can be found (on a simpler scale) in the aquatic, amphibian, reptilian, mammalian, and primate brains preceding it. Yet, from a nonverbal-communication perspective, what sets our brain apart are those highly specialized areas which control fine motor movements of the fingers, lips, and tongue, all of which evolved as neurological "smart parts."

Intellectual digits. Areas of neocortex empowered members of the genus Homo to move their fingers through complex sequences of steps resulting in the manufacture, e.g., of Oldowan stone tools (see ARTIFACT). Later, perhaps 200,000 years ago, early members of Homo sapiens moved their fingers, lips, and tongues in a parallel, sequenced manner to communicate about the manufacture of tools and artifacts. As they mirrored the process, i.e., pantomimed it through patterns of articulation (manual as well as vocal), language was born.

Mental imagery. The brain creates its own nonverbal imagery (i.e., "sees" without external visual input, through the "mind's eye") by activating ". . . the dorsal (area 19) and ventral (fusiform gyrus) visual association areas, superior and inferior parietal cortex, as well as other nonvisual cortices such as the dorsolateral prefrontal cortex and angular gyrus" (Miyashita 1995:1719).

Right brain, left brain I. Studies agree that as nonverbal cues are sent and received, they are more strongly influenced by modules of the right-side neocortex (esp. in right-handed individuals) than they are by left-sided modules. Anatomically, this is reflected a. in the greater volume of white matter (i.e., of myelinated axons which link nerve-cell bodies) in the right neocortical hemisphere, and b. in the greater volume of gray matter (i.e., of nerve-cell bodies or neurons) in the left. The right brain's superior fiber linkages enable its neurons to better communicate with feelings, memories, and senses, thus giving this side its deeper-reaching holistic, nonverbal, and "big picture" skills. The left brain's superior neuronal volume, meanwhile, allows for better communication among the neocortical neurons themselves, which gives this side a greater analytic and intellectually narrower "focus" (see, e.g., Gur et al. 1980). Research by UCLA neuroscientist Daniel Geschwind and colleagues shows that left-handers have more symmetric brains, due to genetic control; the two sides are more equal than those in the brains of right-handers (March 11, 2002 article in Proceedings of the National Academy of Sciences).

Right brain, left brain II. Communication problems due to deficits in the usually dominant left-brain hemisphere include Broca's aphasia and ideomotor apraxia. Problems in the usually nondominant right-brain hemisphere include aprosody, inattention to one side of the body (hemi-inattention), visuospatial disorders, and affective agnosia. The dominant hemisphere produces, processes, and stores individual speech sounds. The nondominant hemisphere produces and processes the intonation and melody patterns of speech (i.e., prosody; see TONE OF VOICE).

Right brain, left brain III. To assist in the production and understanding of nonverbal cues, fiber links of white matter connect modules of neocortex within the right-brain hemisphere. Preadapted white-matter fibers link modules within the left-brain hemisphere, as well, to assist in the production and understanding of speech. 1. Axon cables make up short, U-shaped association tracts which link adjacent neocortical gyri. 2. Longer, thicker association-tract cables link more distant modules and lobes within each hemisphere. Linguistically, the key cable is the superior longitudinal fasciculus. It links the temporal lobe's area 22 and the frontal lobe's area triangularis to the angular gyrus and the supramarginal gyrus of the parietal lobe. The best-known part of this important communications cable is the left brain's arcuate fasciculus (see VERBAL CENTER).

Supplementary motor cortex (SMC). 1. "Stimulation of the supplementary motor cortex can produce vocalization or complex postural movements, such as a slow movement of the contralateral hand in an outward, backward, and upward direction. This hand movement is accompanied by a movement of the head and eyes toward the hand" (Willis 1998:215). 2. Imaging studies reveal that merely thinking about a body movement activates the supplementary motor cortex; the subsequent movement itself activates both the latter area and the primary motor cortex (Willis 1998:216). [Author's note: We hypothesize that PET studies of linguistic tasks showing SMC activity that is unrelated to speech may reveal gestural fossils ("ghosts") of movements humans once used to communicate apart from (i.e., before the advent of) words themselves.]

Neuro-notes I. The jump from posture, facial expression, and gesture to sign language and speech was a quantum leap in evolution. And yet, the necessary brain areas (as well as the necessary body movements) were established hundreds of millions of years before our kind arrived in Nonverbal World.

Neuro-notes II. To the primate brain's hand-and-arm gestures, our brain added precision to fingertips by attaching nerve fibers from the primary motor neocortex directly to spinal motor neurons in charge of single muscle fibers within each digit. Direct connections were made through the descending corticospinal tract to control these more precise movements of the hand and fingers.

Neuro-notes III. With practice we can thread a needle, while our closest animal relative, the chimpanzee, cannot. No amount of practice or reward has yet trained a chimp to succeed in advanced tasks of such precision; the chimpanzee brain simply lacks the necessary control.

Neuro-notes IV. As our digits became more precise, so did our lips and tongue. These body parts, too, occupy more than their share of space on the primary motor map (see HOMUNCULUS).

Neuro-notes V. Using the mammalian tongue's food-tossing ability as a start, our human brain added precision to the tongue tip just as it did to the fingertips. Nerve fibers from the primary motor cortex were linked directly to motor neurons of the hypoglossal nerve (cranial nerve XII) in charge of contracting individual muscle fibers within the tongue. Direct connections were made through the descending corticobulbar tract to precisely control movements of the tongue tip needed for speech.

Neuro-notes VI. Humans are what they are today because their ancestors followed a knowledge path. At every branch in the 500-million-year-old tree of vertebrate evolution, the precursors of humanity opted for brains over brawn, speed, size, or any lesser adaptation. Whenever the option of intelligent response or pre-programmed reaction presented itself, a single choice was made: Be smart.

See also NONVERBAL BRAIN.

Copyright 1998 - 2022 (David B. Givens/Center for Nonverbal Studies)

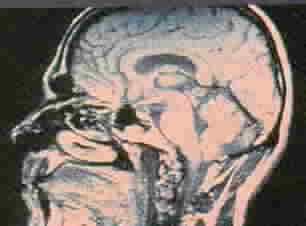

Photo of imaged brain (from Kandel et al. 1991; copyright 1991 by Appleton & Lange)